State-of-the-"art" - machine learning for graphics and UI designs

Before starting my summer internship at Adobe Research last year, I read several papers about machine learning for design. While none of my intern projects focused on this, I found the research fascinating, so at the HCIL symposium 2022, I gave a 1-hour talk on the research progress of ML for graphics and UI design. Inspired by one of the attendees — Prof. Hal Daumé III’s Tweetstorm — I wrote this post to share a skimmable version of the talk.

Talk format:

I focus on two topics, “find design examples/inspirations” and “generate layout automatically,” through an informal literature review of selected papers published at top-tier machine learning and human-computer interaction conferences and journals.

The symposium talk was hybrid: about 40 attendees joined, half of them virtually. Some were designers or students in design-related majors, and some were ML/HCI researchers. Thanks to Slido, I collected primary data about the audience’s preferences for features enabled by machine learning/data-driven methods.

Table of contents:

Part 1: Find design examples/inspirations

- by Cascading Style Sheets (CSS) properties

- by descriptive words

- by visual properties

- by images

- by a chatbot

Part 2: Generate layout automatically

- by constraints

- by “energies” (design guidelines)

- by keywords/categories

- by spatial relations

- with background and embellishments

Part 1: Find design examples/inspirations

Motivation: Designers routinely seek inspiration. A study with 24 UI/UX designers found that most searched by keyword on Google, Behance, Pinterest, etc., and collected search results covering different aspects: layout variations (N=22), font styles (N=14), color palettes (N=11).

Pain points:

- It’s difficult to find the right textual description to search for

- It’s time-consuming to find good examples

- Search results do not meet the design requirement

How can machine learning/data-driven methods help?

1.1 search by Cascading Style Sheets (CSS) properties

Cascading Style Sheets (CSS) is a style sheet language for styling web content. Users can select any part of a webpage and inspect its font family and size, color, padding, etc. (Read about how to do it.)

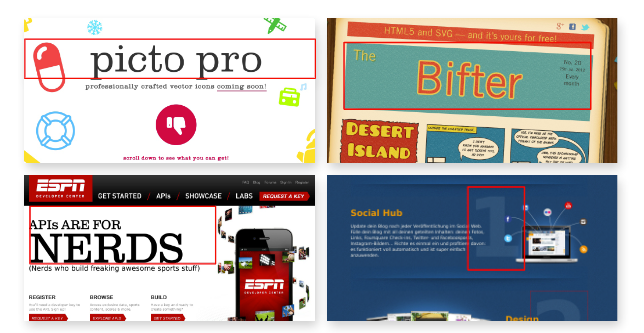

Researchers downloaded 100k+ web pages and built a giant database. Users can search design examples in the database by CSS properties, e.g., font size, widget position, and global page layout. Here are some search results:

- designs with font size > 100px

- designs with the search bar in the middle of a page

- designs for global layout given a layout query (leftmost)
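To make the idea concrete, here is a minimal sketch of the first query above (font size > 100px). It is not the researchers' system: the `Page` and `Element` records, the `px` helper, and the example URLs are all hypothetical, and a real database would hold computed styles for every element of 100k+ pages.

```python
from dataclasses import dataclass, field


@dataclass
class Element:
    tag: str
    css: dict  # computed CSS properties, e.g. {"font-size": "120px"}


@dataclass
class Page:
    url: str
    elements: list = field(default_factory=list)


def px(value):
    """Parse a CSS pixel length such as '120px'; return None for anything else."""
    if isinstance(value, str) and value.endswith("px"):
        try:
            return float(value[:-2])
        except ValueError:
            return None
    return None


def pages_with_font_size_over(pages, threshold_px):
    """Return URLs of pages that contain at least one element above the threshold."""
    hits = []
    for page in pages:
        for el in page.elements:
            size = px(el.css.get("font-size"))
            if size is not None and size > threshold_px:
                hits.append(page.url)
                break  # one matching element is enough for this page
    return hits


# Hypothetical two-page "database"
pages = [
    Page("https://example.com/a", [Element("h1", {"font-size": "120px"})]),
    Page("https://example.com/b", [Element("p", {"font-size": "16px"})]),
]
results = pages_with_font_size_over(pages, 100)
```

Here `results` contains only the first page. Queries on widget position or global layout would need richer records (bounding boxes, element roles), which this sketch leaves out.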

What attendees said:

One of the attendees expressed concerns about searching by CSS properties, as the same widget could be named differently across webpages (e.g., carousel vs. slider).

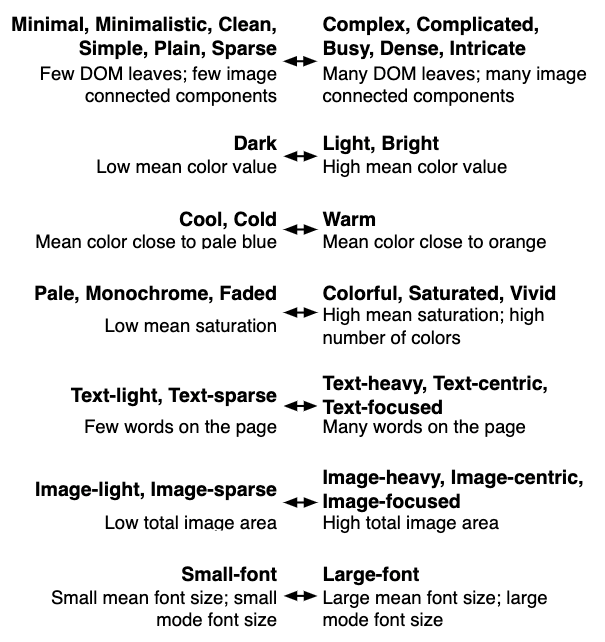

1.2 Search by descriptive words

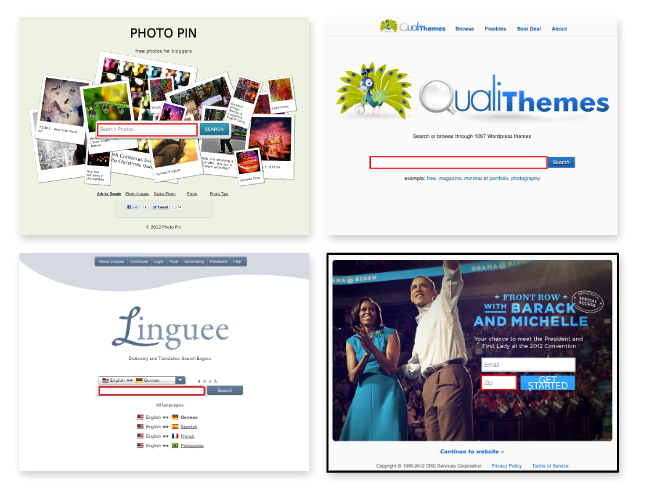

Researchers have also mapped descriptive words to Document Object Model (DOM) and CSS properties. Users can type descriptive words directly; the search engine translates them into the corresponding CSS properties and retrieves matching results.
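A rough sketch of the translation step, under heavy assumptions: the `KEYWORD_RULES` table, its thresholds, and the two example designs are invented for illustration, and the luminance formula ignores gamma. The mapping used in the actual research is not described in this post.

```python
def luminance(hex_color):
    """Approximate luminance (0 = black, 1 = white) of a '#rrggbb' color, ignoring gamma."""
    r, g, b = (int(hex_color[i:i + 2], 16) / 255 for i in (1, 3, 5))
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


# Hypothetical keyword -> CSS predicate table; thresholds are arbitrary.
KEYWORD_RULES = {
    "dark": lambda css: luminance(css["background-color"]) < 0.3,
    "bright": lambda css: luminance(css["background-color"]) > 0.7,
}


def search(designs, keyword):
    """Translate a descriptive word into a CSS predicate and return matching designs."""
    rule = KEYWORD_RULES[keyword.lower()]
    return [name for name, css in designs.items() if rule(css)]


# Hypothetical designs, each reduced to a single CSS property
designs = {
    "night-mode landing page": {"background-color": "#101018"},
    "pastel portfolio": {"background-color": "#fdf6e3"},
}
dark_designs = search(designs, "dark")
```

A single property is obviously too crude for words like "minimal" or "cheerful"; those would presumably need combinations of DOM and CSS features.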

What attendees said:

Designers in attendance shared keywords they usually use:

minimal/minimalism/minimalistic

neat/clean/simple/easy

colorful/bright

cheerful/fun

dark

1.3 Retrieve images by visual properties

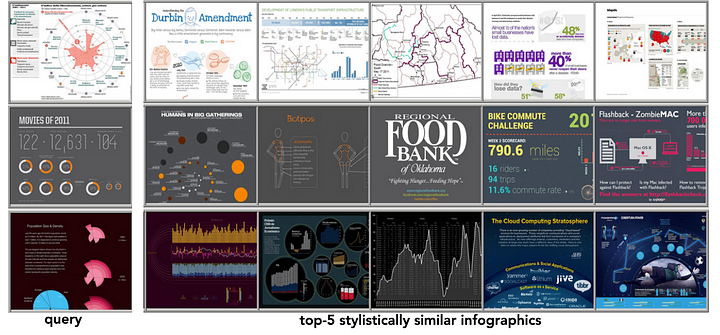

In the pre-deep-learning era, retrieving similar images by visual properties achieved reasonably good results. For example, by combining color histogram and Histogram of Oriented Gradients (HOG), researchers have found stylistically similar infographics.
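The sketch below illustrates the general recipe (concatenate a color histogram with a gradient-orientation histogram, then compare descriptors by distance). It is a deliberately simplified stand-in: the orientation histogram uses a single cell with no block normalization, so it is not a full HOG, and the striped toy images and all function names are made up.

```python
import math


def color_histogram(img, bins=2):
    """Normalized joint RGB histogram with `bins` levels per channel."""
    hist = [0.0] * bins ** 3
    n = 0
    for row in img:
        for r, g, b in row:
            idx = ((r * bins // 256) * bins + (g * bins // 256)) * bins + (b * bins // 256)
            hist[idx] += 1
            n += 1
    return [h / n for h in hist]


def orientation_histogram(img, bins=4):
    """Single-cell, unsigned gradient-orientation histogram (a much-simplified HOG)."""
    gray = [[(r + g + b) / 3 for r, g, b in row] for row in img]
    hist = [0.0] * bins
    for y in range(1, len(gray) - 1):
        for x in range(1, len(gray[0]) - 1):
            gx = gray[y][x + 1] - gray[y][x - 1]
            gy = gray[y + 1][x] - gray[y - 1][x]
            mag = math.hypot(gx, gy)
            if mag == 0:
                continue
            angle = math.degrees(math.atan2(gy, gx)) % 180  # unsigned orientation
            hist[int(angle / 180 * bins) % bins] += mag  # vote weighted by magnitude
    total = sum(hist) or 1.0
    return [h / total for h in hist]


def descriptor(img):
    return color_histogram(img) + orientation_histogram(img)


def stripes(c0, c1, vertical, size=6):
    """Toy image: 2-pixel-wide stripes alternating between two RGB colors."""
    return [[(c0, c1)[((x if vertical else y) // 2) % 2] for x in range(size)]
            for y in range(size)]


query = stripes((220, 30, 30), (255, 255, 255), vertical=True)
library = {
    "red vertical": stripes((200, 40, 40), (250, 250, 250), vertical=True),
    "blue horizontal": stripes((30, 30, 220), (0, 0, 0), vertical=False),
}
q = descriptor(query)
ranked = sorted(library, key=lambda name: math.dist(q, descriptor(library[name])))
```

`ranked` lists the red vertical-stripe image first, since it shares both the color bins and the dominant edge direction of the query.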

1.4 Retrieve images by image embeddings

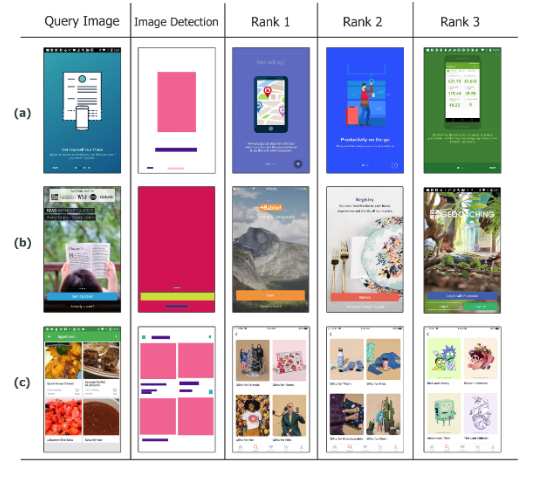

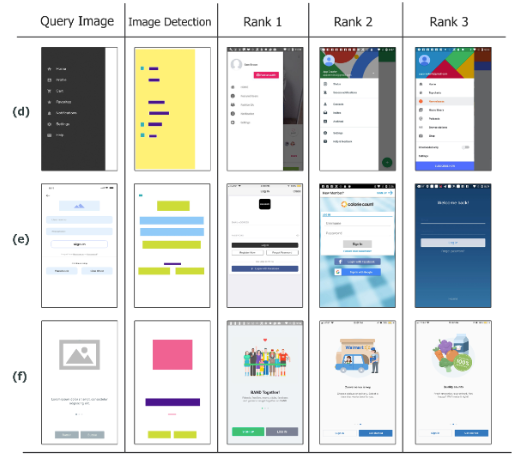

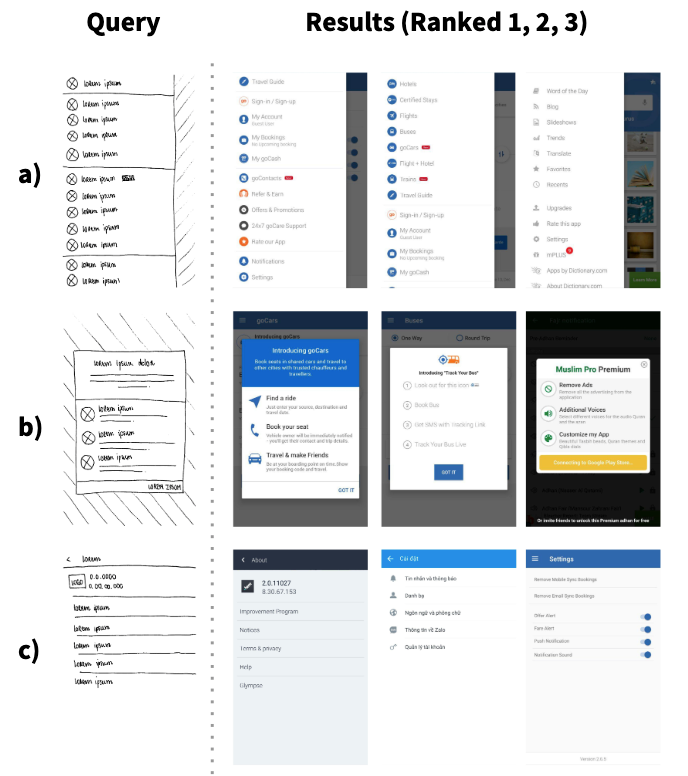

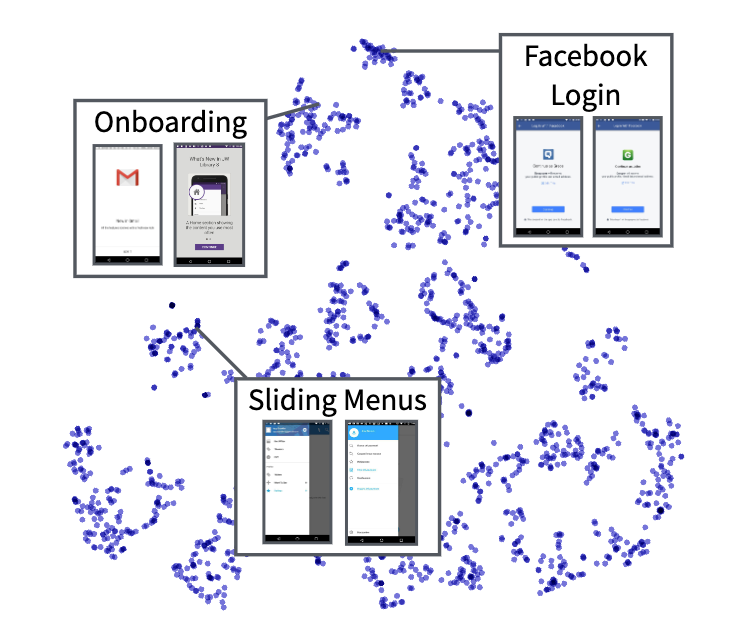

Neural network-based methods allow users to search for similar UIs by high-fidelity screenshots, wireframes, sketches, and even partial sketches.

Search similar UI designs by high-fidelity screenshots.

Search similar UI designs by wireframes.

Search similar UI designs by sketches.

Although the above methods take different inputs, they adopt the same approach: learning image embeddings (a low-dimensional vector representation) and performing nearest neighbor searches.
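The retrieval half of that approach can be sketched in a few lines. The three-dimensional vectors below are invented placeholders; in the papers, embeddings come from a trained neural encoder and have many more dimensions, and large collections would use an approximate nearest-neighbor index rather than a full sort.

```python
import math


def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm


def nearest_neighbors(query, index, k=2):
    """Return the names of the k stored designs most similar to the query embedding."""
    scored = sorted(index.items(),
                    key=lambda item: cosine_similarity(query, item[1]),
                    reverse=True)
    return [name for name, _ in scored[:k]]


# Hypothetical precomputed embeddings for three UI screens
index = {
    "login_screen": [0.9, 0.1, 0.0],
    "signup_screen": [0.8, 0.2, 0.1],
    "photo_gallery": [0.0, 0.1, 0.9],
}

# Hypothetical embedding of the user's screenshot, wireframe, or sketch
query_embedding = [0.9, 0.1, 0.05]
neighbors = nearest_neighbors(query_embedding, index, k=2)
```

The input modality only changes which encoder produces `query_embedding`; the search step stays the same.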

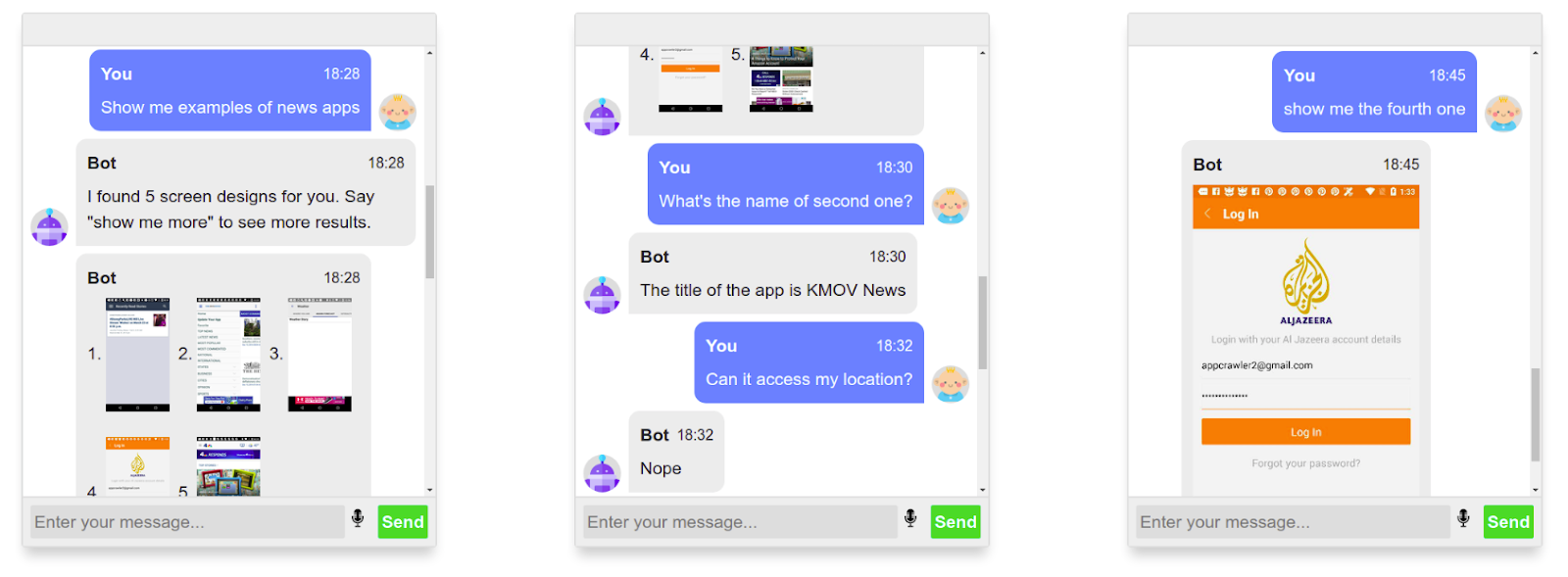

1.5 Retrieve images by chatbots

Chatbots could help users find design examples in a database via conversations.

An example chatbot that helps users retrieve UI examples

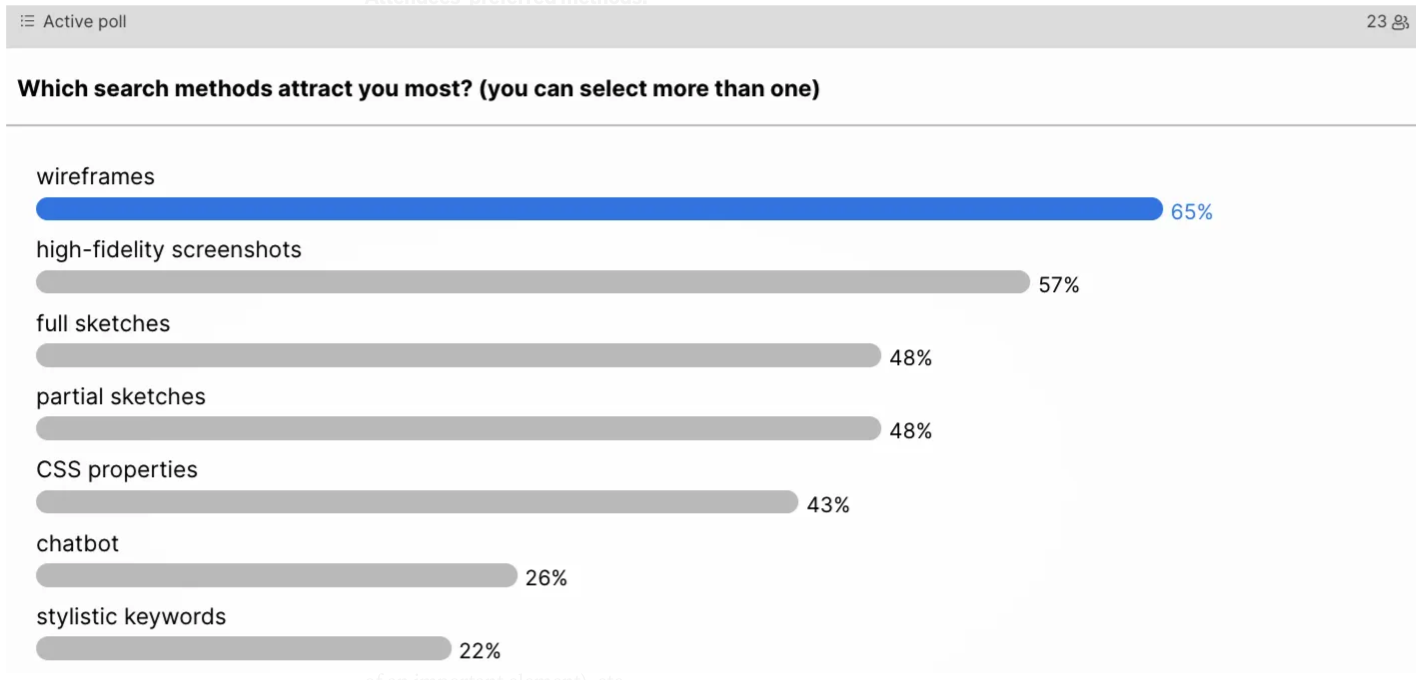

What attendees said:

23 attendees voted for their preferred methods to find design examples/inspirations (note: multiple selections were allowed; see figure below). Searching by wireframes was the most popular (65%), followed by high-fidelity screenshots (57%), while searching by chatbot (26%) and stylistic keywords (22%) were less popular. This is likely because designers found keyword-based search results to be too broad.

Part 2: Generate layout automatically

Motivation: When seeking inspiration, most designers (22 out of 24) collect layout-related examples. If machine learning could automate the layout creation process, that would be a great time saver. Below I discuss options for doing this.

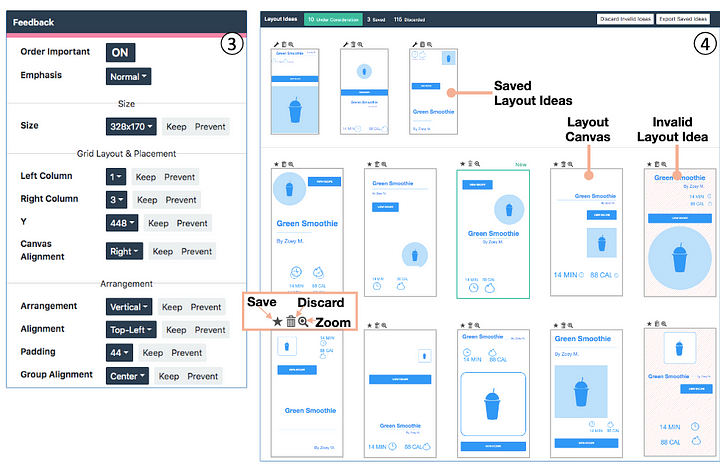

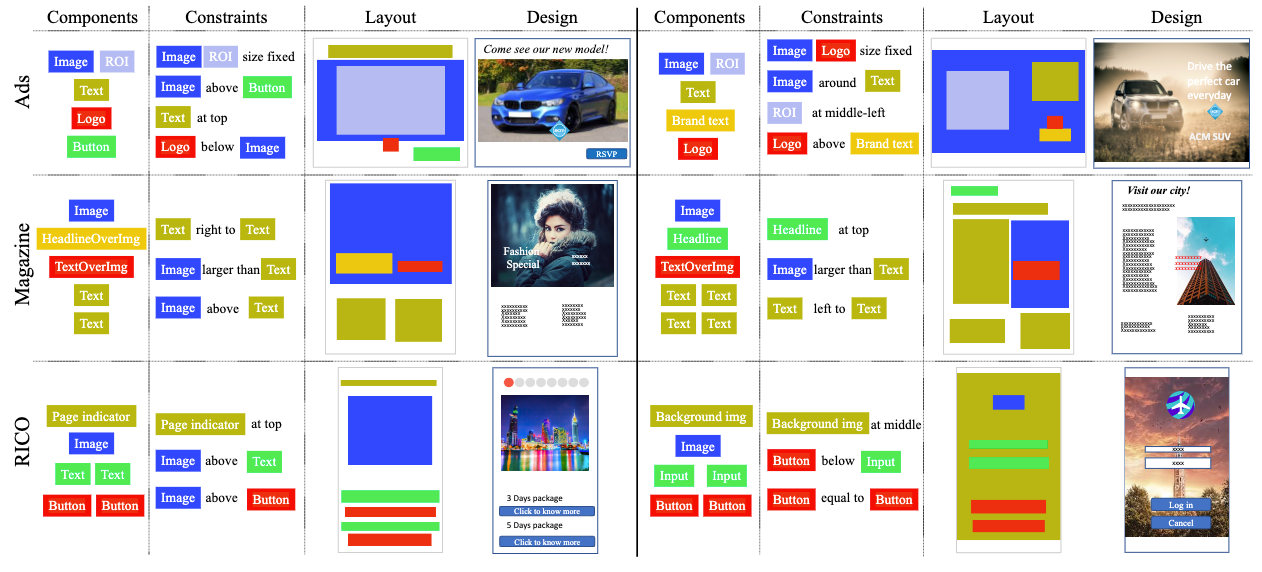

2.1 Generate layout based on constraints

Scout generates feasible layouts given constraints specified by users. Constraints could be non-overlapping, minimal sizes, alignment, visual hierarchy (paddings within groups), emphasis (can’t decrease the size of an important element), etc.
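As a toy illustration of constraint-based generation (not Scout's actual solver), the sketch below brute-forces every placement of two elements on a coarse grid and keeps the ones that satisfy all constraints. The grid size, element names, and the "title above image" rule standing in for visual hierarchy are assumptions; minimal sizes are simplified to fixed sizes.

```python
from itertools import product

CANVAS_W, CANVAS_H = 4, 4  # hypothetical coarse grid


def overlaps(a, b):
    """True if two (x, y, w, h) boxes share any area."""
    ax, ay, aw, ah = a
    bx, by, bw, bh = b
    return ax < bx + bw and bx < ax + aw and ay < by + bh and by < ay + ah


def inside(box):
    x, y, w, h = box
    return x >= 0 and y >= 0 and x + w <= CANVAS_W and y + h <= CANVAS_H


def feasible_layouts(sizes):
    """Enumerate placements of a title and an image that satisfy every constraint."""
    (tw, th), (iw, ih) = sizes["title"], sizes["image"]
    layouts = []
    for tx, ty, ix, iy in product(range(CANVAS_W), range(CANVAS_H), repeat=2):
        title, image = (tx, ty, tw, th), (ix, iy, iw, ih)
        if not (inside(title) and inside(image)):
            continue
        if overlaps(title, image):  # constraint: non-overlapping
            continue
        if tx != ix:                # constraint: left edges aligned
            continue
        if ty > iy:                 # constraint: title sits above the image
            continue
        layouts.append({"title": title, "image": image})
    return layouts


layouts = feasible_layouts({"title": (4, 1), "image": (4, 2)})
```

Exhaustive enumeration only works for tiny examples like this one; a practical tool would hand the same constraints to a dedicated solver.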

What attendees said:

Designers shared typical constraints they encountered, including:

information hierarchy

branding requirements

headers and footers

2.2 Generate layout based on “energies” (design guidelines)

DesignScape generates layouts by minimizing design guideline violations (“energies”). Design guideline violations include alignment violations, balance violations, overlap, etc.
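The energy idea can be sketched as a scoring function plus a search that lowers it. Everything below is a toy under stated assumptions: the energy has only two terms (overlap area and left-edge misalignment) with equal weights, and the greedy one-unit moves are a stand-in, not DesignScape's optimizer.

```python
def overlap_area(a, b):
    """Overlapping area of two (x, y, w, h) boxes; 0 if they do not intersect."""
    w = min(a[0] + a[2], b[0] + b[2]) - max(a[0], b[0])
    h = min(a[1] + a[3], b[1] + b[3]) - max(a[1], b[1])
    return max(w, 0) * max(h, 0)


def energy(layout):
    """Sum of guideline violations: pairwise overlap plus spread of left edges."""
    boxes = list(layout.values())
    e_overlap = sum(overlap_area(a, b)
                    for i, a in enumerate(boxes) for b in boxes[i + 1:])
    lefts = [b[0] for b in boxes]
    e_align = max(lefts) - min(lefts)
    return e_overlap + e_align


def minimize(layout, steps=100):
    """Greedy descent: apply the single one-unit move that lowers the energy most."""
    layout = dict(layout)
    for _ in range(steps):
        best, best_e = None, energy(layout)
        for name, (x, y, w, h) in layout.items():
            for dx, dy in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                candidate = {**layout, name: (x + dx, y + dy, w, h)}
                e = energy(candidate)
                if e < best_e:
                    best, best_e = candidate, e
        if best is None:  # no move improves the layout: local minimum
            break
        layout = best
    return layout


start = {"title": (3, 1, 4, 2), "image": (0, 2, 4, 3)}
result = minimize(start)
```

Starting from an overlapping, misaligned layout, the search ends at a layout with zero energy. Greedy descent can get stuck in local minima on harder cases, which is one reason real systems use more robust optimizers.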

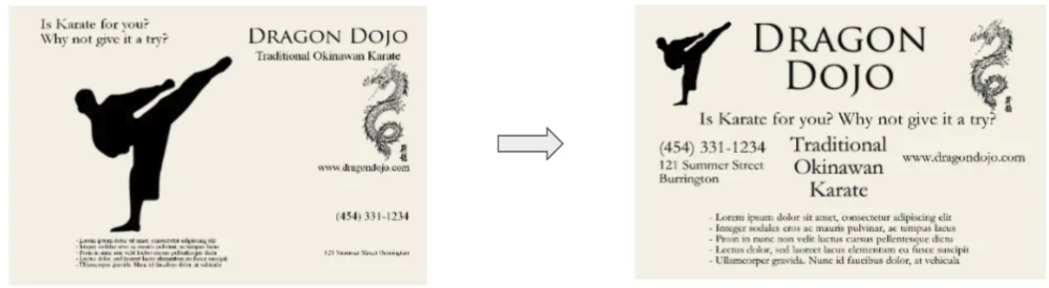

Automatic Generation of Visual-Textual Presentation Layout

2.3 Generate layout based on keywords/categories

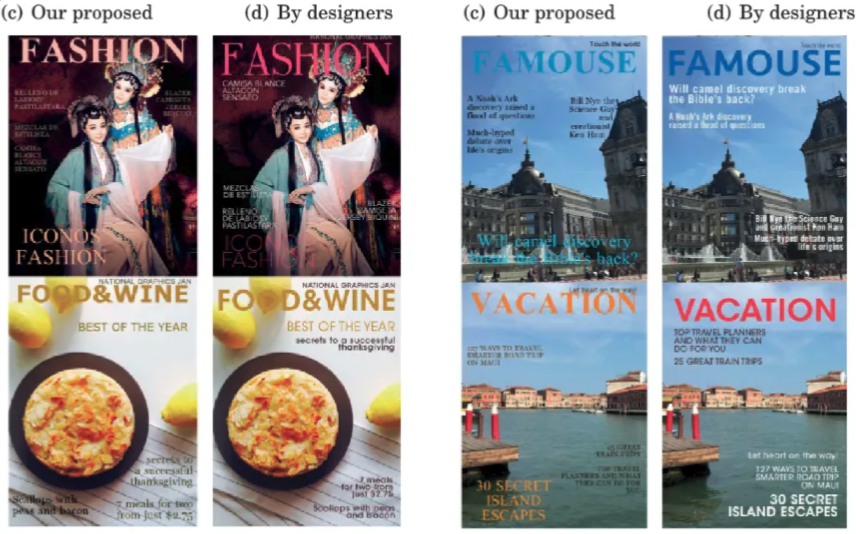

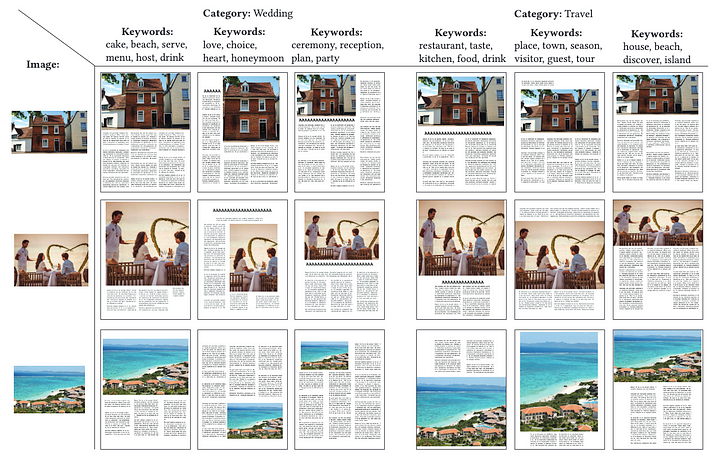

Researchers have trained Generative Adversarial Networks (GAN) to generate magazine layouts based on images, text, and magazine categories. Below are different magazine layouts generated for the same image but with different keywords.

2.4 Generate layout based on spatial relations

Researchers have also modeled spatial relations among objects as graphs and trained Graph Convolutional Networks (GCN) to generate designs satisfying such relations.
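To show what "relations as a graph" might look like as data, here is a small sketch: elements are nodes, each relation is a labeled edge, and a checker tests whether a candidate layout satisfies every edge. The relation names (`above`, `left_of`), the box format, and the layouts are assumptions; the GCN that actually generates layouts is not modeled here.

```python
# Each edge is (subject, relation, object); names are hypothetical.
relations = [("title", "above", "image"), ("logo", "left_of", "title")]

# Geometric test for each relation on (x, y, w, h) boxes, with y growing downward
CHECKS = {
    "above": lambda a, b: a[1] + a[3] <= b[1],
    "left_of": lambda a, b: a[0] + a[2] <= b[0],
}


def adjacency(relations):
    """Build an adjacency list: node -> list of (relation, neighbor)."""
    graph = {}
    for subject, rel, obj in relations:
        graph.setdefault(subject, []).append((rel, obj))
        graph.setdefault(obj, [])
    return graph


def satisfies(layout, relations):
    """True if every relation edge holds for the given layout."""
    return all(CHECKS[rel](layout[s], layout[o]) for s, rel, o in relations)


graph = adjacency(relations)

good_layout = {"logo": (0, 0, 1, 1), "title": (1, 0, 3, 1), "image": (0, 1, 4, 3)}
bad_layout = {"logo": (0, 3, 1, 1), "title": (1, 3, 3, 1), "image": (0, 0, 4, 3)}
```

`satisfies(good_layout, relations)` holds, while `bad_layout` fails because the title sits below the image.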

2.5 Generate layout with background and embellishments

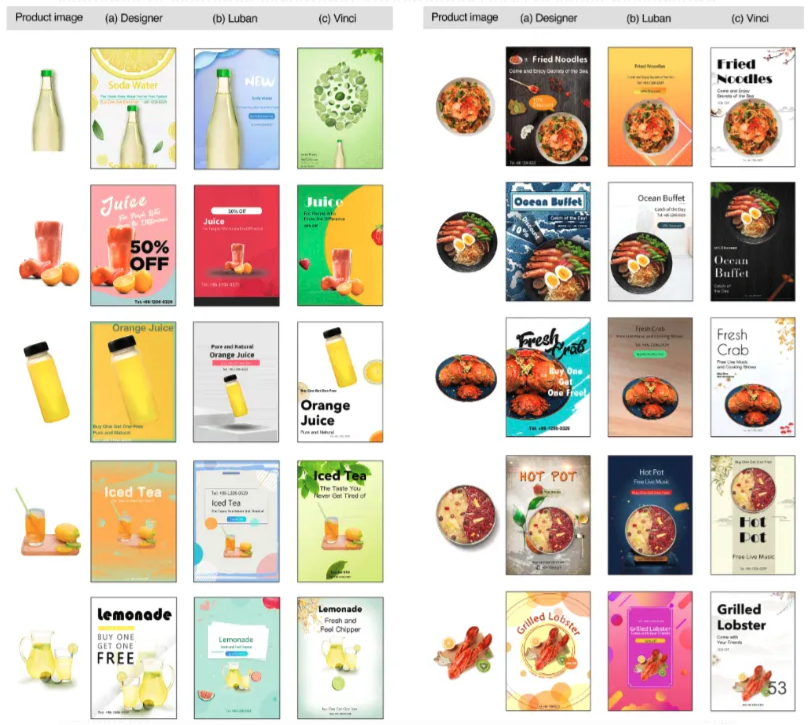

Most ML methods generate layouts using only given assets. A prototype called Vinci could suggest background and embellishments. Vinci views the design process as linear design sequences and leverages a sequence-to-sequence Variational Autoencoder (VAE) to select background and embellishments.

What attendees said:

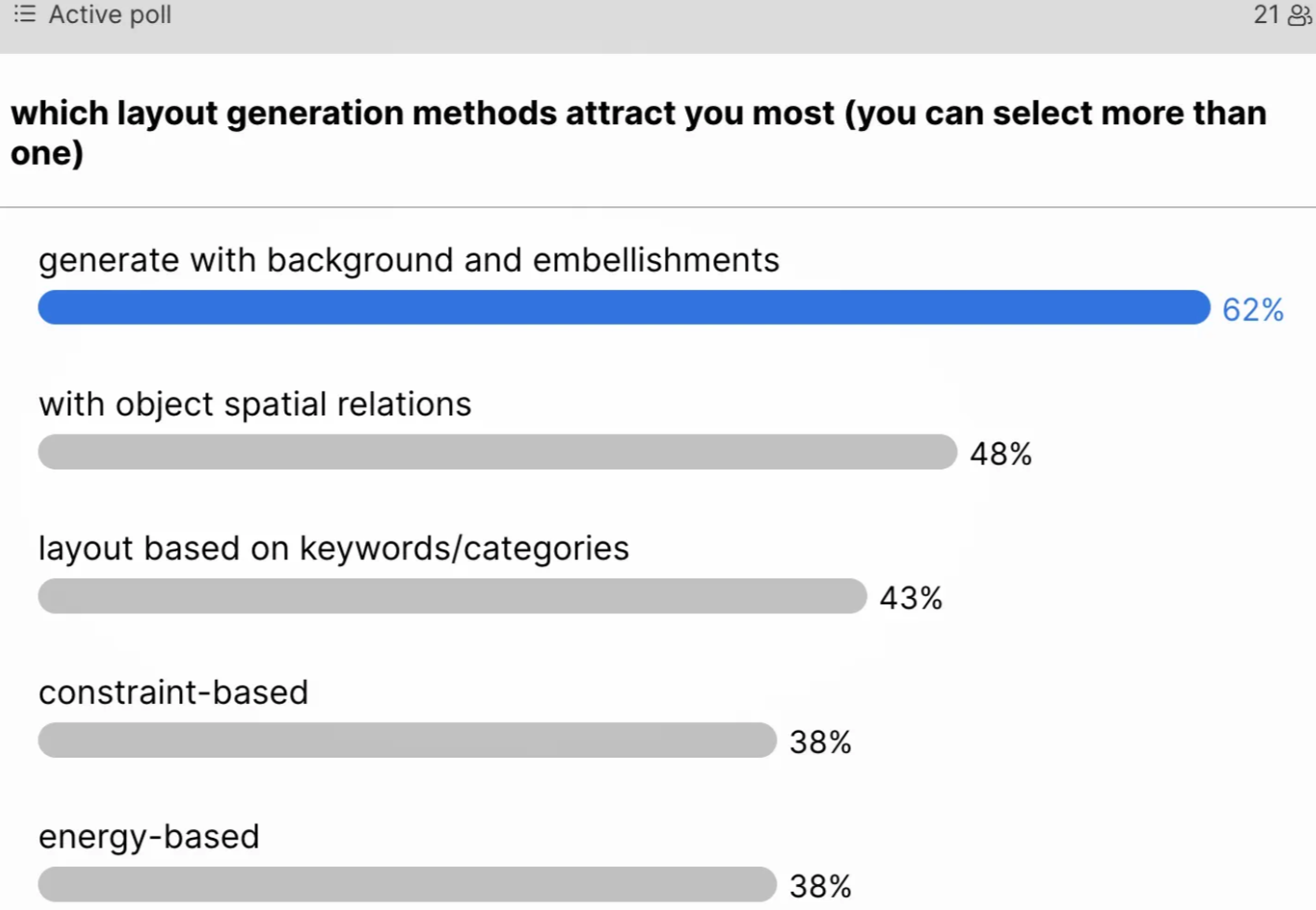

21 attendees voted for their favorite layout generation methods. Vinci garnered the most votes (62%), followed by spatial relations (48%). Full results are below:

Takeaways:

- Some important ML techniques don’t lead to popular features, e.g., searching for design examples by chatbot (note: the talk was given before ChatGPT was released).

- The same ML component could lead to features with varied popularity, e.g., searching by wireframes is more popular than searching by high-fidelity screenshots or sketches.

- Designers are eager to adopt such techniques! One designer even asked whether it’s possible to download one of the “tools.” Unfortunately, it’s only research code: no server, no front-end 🤷‍♀️

Further reading:

Some papers don’t fall into the above two topics but are still interesting to read: